reduce the number of Redis operations during invalidation.We chose to rework the cache invalidation logic to something that would scale with an increased number of users. multiple redis databases and/or different redis data structure

#Datagrip redis how to#

We had couple of options on how to deal with the invalidation issue: Thankfully, our DevOps guys already added monitoring of large Redis keys. And that’s not even mentioning the size of the values stored in Redis, which would also add time overhead if left unchecked. To illustrate: if each scan operation in Redis would take 1 ms (value pulled out of a hat), the invalidation would add 300 ms (scans use token pagination and thus must be done sequentially) to the API response (when request is finished, we guarantee to clients that old data is flushed from cache). We ran into issues where we had around 300k keys in Redis - so for each invalidation, we had to perform 300 SCAN requests only to remove a handful of keys (20 max).įrom our tracing we could see that a substantial amount of time was spent on the scanning operations. With new users and/or more keys, Redis will have to do more work. This works fine for a smaller number of keys in Redis, but it does not scale.įor example if we have 20 cached keys for one user, we scan with a count of 1000 and we have 1000 users – we will have to perform 20 SCAN requests to get through the keyspace ((20keys/user * 1000 users) / (1000 count)). This essentially means that the whole keyspace will get scanned no matter how many keys will get matched and returned to the client. the MATCH filter is applied after elements are retrieved from the collection, just before returning data to the client. But there is a Redis implementation detail with SCAN command and the MATCH option that could trip somebody up. Now this works fine and is easy to understand. Also it’s the recommended way to find a subset of keys in the keyspace (another option includes KEYS command - but its use in production setting is discouraged by Redis). This command scans through the whole keyspace.

It was running SCAN command with MATCH option for a particular user (e.g. The initial implementation done was fairly simple.

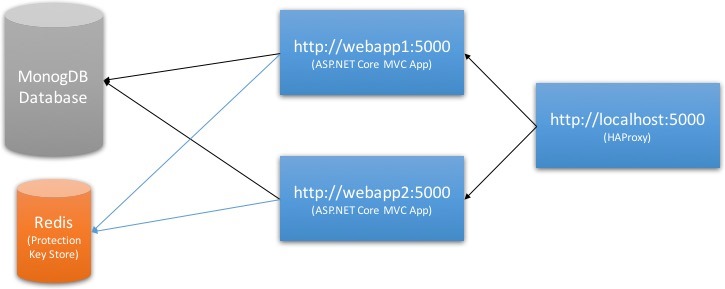

user’s settings change), we have to invalidate the cache of multiple responses at once. The key design for user cache conforms to the following design: We have all keys in one Redis database, using the GET/SET commands as expected. We cache the responses (with a time-to-live ( TTL)), so that each subsequent request for the same content gets the response as fast as possible and without increasing load on the main SQL database. One of our projects deals with a high degree of personalization where we need to serve highly personalized data through the API. In this post, I will provide a story of how we reduced Redis usage during cache invalidation, and I will give a small deep dive on Redis advanced features, namely sorted sets. Though due to its ease of use it’s easy to overlook performance bottlenecks. Its speed, simplicity, tooling and cloud support are all arguments for using it. We have been using Redis as a caching layer, and we have become fond of this technology.
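To make the scanning cost described above concrete, here is a minimal sketch in plain Python that imitates how SCAN with MATCH behaves: each call walks a fixed-size slice of the keyspace and only then filters it by the pattern. It does not talk to Redis. The key format `user:<id>:resp:<n>`, the function names and the linear cursor are assumptions made for illustration; the real key design is the one shown in the image, and real Redis treats the count as a hint and uses an opaque cursor.

```python
from fnmatch import fnmatchcase


def scan(keyspace, cursor, match, count):
    """Toy stand-in for SCAN: fetch `count` keys first, apply `match` afterwards."""
    batch = keyspace[cursor:cursor + count]
    next_cursor = cursor + count
    if next_cursor >= len(keyspace):
        next_cursor = 0  # as in Redis, a returned cursor of 0 ends the iteration
    return next_cursor, [key for key in batch if fnmatchcase(key, match)]


def find_user_keys(keyspace, user_id, count=1000):
    """Collect one user's keys and count how many scan calls that took."""
    calls, found, cursor = 0, [], 0
    while True:
        # Each call depends on the cursor returned by the previous one,
        # so the calls cannot run in parallel.
        cursor, matched = scan(keyspace, cursor, f"user:{user_id}:*", count)
        calls += 1
        found.extend(matched)
        if cursor == 0:
            break
    return found, calls


# Hypothetical keyspace: 15,000 users with 20 cached responses each (300k keys).
keyspace = [f"user:{u}:resp:{n}" for u in range(15_000) for n in range(20)]
found, calls = find_user_keys(keyspace, user_id=42)

assumed_ms_per_scan = 1  # the "pulled out of a hat" figure from the text
print(f"keys={len(keyspace)} matched={len(found)} scans={calls} "
      f"added_latency_ms={calls * assumed_ms_per_scan}")
```

In this toy model the number of scan calls depends only on the size of the keyspace and the count, not on how many keys belong to the user, which is why the cost grows as users are added.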
